Over 1500 researchers across multiple fields have banded together to openly reject the use of technology to predict crime, arguing it would reproduce injustices and cause real harm.

The Coalition for Critical Technology wrote an open letter to Springer Verlag in Germany to express grave concerns about automated facial recognition software newly developed by a group of scientists from Harrisburg University, Pennsylvania.

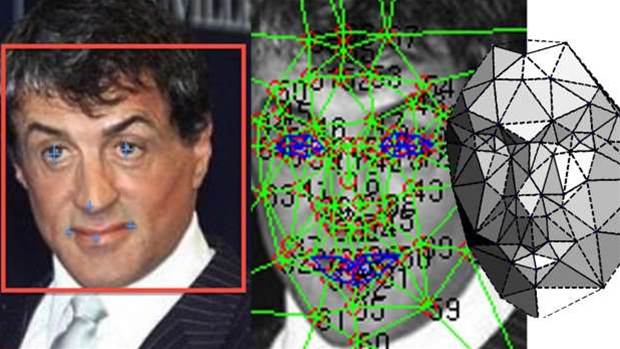

Springer's Nature Research Book Series intends to publish an article by the Harrisburg scientists named A Deep Neural Network Model to Predict Criminality Using Image Processing.

The coalition wants the publication of the study - and others in a similar vein - to be rescinded, arguing the paper makes claims that are based on unsound scientific premises, research and methods.

Developed by New York Police Department veteran and PhD student Jonathan Korn along with professors Nathaniel Ashby and Roozbeh Sadeghian, the Harrisburg University researchers' software is claimed to have 80 per cent accuracy and no racial bias.

According to the Harrisburg University researchers, the automated facial recognition technology reduces implicit biases and emotional responses for crime prevention, law enforcement and military applications.

"The software can predict if someone is a criminal based solely on a picture of their face," the Harrisburg University researchers claimed.

Automated systems that sift through human populations looking for individuals who are likely to commit crime in the future, and that apprehend and punish them before the illicit activities take place, were predicted by authors George Orwell and Philip K Dick in the 1940s and 1950s.

Crime prediction technology was popularised in Minority Report, a 2002 movie based on the similarly named short story by Dick.

With accelerating uptake of increasingly capable and powerful neural networks for machine learning and artificial intelligence, so-called "pre-crime" systems are now possible.

However, despite the promise of less biased crime prevention through machine learning based on practices claimed to be fair, unbiased, accountable and transparent, the coalition of scientists sounded the alarm, arguing the technology is anything but.

"Let’s be clear: there is no way to develop a system that can predict or identify “criminality” that is not racially biased - because the category of “criminality” itself is racially biased," the coalition of scientists said.

Data generated by the criminal justice system cannot be used to "identify criminals" or to predict their behaviour, as it lacks context and causality, and can be fundamentally misinterpreted, they argued.

In addition, people of colour are treated more harshly by the legal system at every stage than white people in the same situation, countless studies have shown.

This inherent bias in the justice system causes serious distortions in the data, the coalition of scientists said.

Machine learning scholars have insufficient training and incentives to acquire the skills necessary to understand the cultural logics and implicit assumptions that underpin their models, which has created a crisis of validity in AI.

Less than two years ago, Amazon was forced to stop using a secret AI recruitment engine after machine learning specialists discovered the algorithm did not like women and selected male applicants instead.

Machine learning must move beyond computer science, the coalition said.

Ignoring inherent and at times inadvertent biases built into machine learning risks developing crime prevention technology that reproduces injustices and causes real harm, the scientists added.
