IBM researchers claim to have developed a distributed deep learning (DDL) platform spanning multiple servers that considerably speeds up neural network training and improves accuracy.

In a research paper, they say they trained the 101-layer ResNet neural network model on the ImageNet-22K data set, which contains over 7.5 million high-resolution images in 22,000 categories and totals several terabytes.

An earlier training run by Microsoft took ten days and reached less than 30 percent accuracy. The IBM researchers say they brought the neural network model to 33.8 percent validation accuracy in roughly seven hours.
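For a rough sense of scale, taking the two reported wall-clock times at face value (and ignoring that the runs used different hardware and reached different accuracies), the naive speedup works out as:

$$
\text{speedup} \approx \frac{10\ \text{days} \times 24\ \text{h/day}}{7\ \text{h}} = \frac{240\ \text{h}}{7\ \text{h}} \approx 34
$$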

Using the Caffe deep learning framework from the University of California, Berkeley, the researchers achieved 95 percent scaling efficiency on 256 GPUs.

Facebook's artificial intelligence research group had earlier recorded 89 percent scaling efficiency on a 256-GPU platform using Caffe2, but with higher communications overhead than the IBM platform.

IBM claims its 95 percent scaling efficiency is a new record.
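Scaling efficiency is commonly defined as the throughput actually achieved on N GPUs divided by N times the single-GPU throughput, i.e. the fraction of ideal linear speedup that survives communication overhead. The article does not spell out the exact definition IBM or Facebook used, so the sketch below assumes this common one. The function name `scaling_efficiency` and all throughput numbers are hypothetical, picked only so the arithmetic lands on the 95 and 89 percent figures at 256 GPUs.

```python
def scaling_efficiency(single_gpu_throughput: float, n_gpus: int, cluster_throughput: float) -> float:
    """Return measured cluster throughput as a fraction of ideal linear scaling.

    Assumes the common definition: cluster / (n_gpus * single_gpu).
    """
    if single_gpu_throughput <= 0 or n_gpus <= 0:
        raise ValueError("throughput and GPU count must be positive")
    ideal = n_gpus * single_gpu_throughput  # perfect linear speedup
    return cluster_throughput / ideal


# Hypothetical numbers for illustration only (not taken from either paper).
single = 200.0  # images/second on one GPU (assumed)
gpus = 256

print(f"{scaling_efficiency(single, gpus, 48_640.0):.0%}")  # 95%
print(f"{scaling_efficiency(single, gpus, 45_568.0):.0%}")  # 89%
```

At this scale, the six-point gap means roughly 3,000 images per second of the 51,200 ideal are lost to overhead in the hypothetical numbers above.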

"These results are on a benchmark designed to test deep learning algorithms and systems to the extreme, so while 33.8 percent might not sound like a lot, it’s a result that is noticeably higher than prior publications," IBM research fellow Hillery Hunter said.

IBM says the technology can be used to train AI models faster and more accurately for specific tasks, such as detecting cancer cells in medical images.

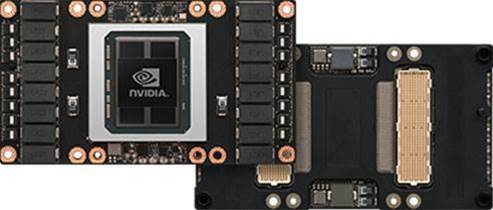

The researchers used 64 of IBM's Power System "Minsky" S822LC servers to train the neural network. Each server featured IBM Power8 processors and four Nvidia Tesla P100 GPUs, for 256 GPUs in total.

IBM's communication algorithm, which uses a multi-ring pattern, is implemented as a library and can also be used with Google's TensorFlow and the Torch scientific computing framework.
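The article does not describe how IBM's multi-ring pattern works. As a rough illustration of the underlying idea only, here is a textbook single-ring all-reduce: every worker sums its gradient vector with every other worker's while only ever sending data to its neighbour on the ring. This is not IBM's algorithm; the function `ring_allreduce` and the in-process simulation (plain Python lists standing in for GPUs) are assumptions made for the sketch.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a single-ring sum all-reduce across len(buffers) workers.

    Each worker's vector is split into n chunks, and data only ever moves
    from worker i to worker (i + 1) % n.
    """
    if not buffers:
        return []
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all workers must hold vectors of equal length")
    data = [list(b) for b in buffers]  # copy so the inputs are untouched
    # Chunk c covers indices bounds[c] .. bounds[c + 1] - 1 (may be empty).
    bounds = [c * length // n for c in range(n + 1)]

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    # Phase 1: reduce-scatter. After n - 1 steps, worker i holds the
    # fully summed chunk (i + 1) % n.
    for step in range(n - 1):
        # All workers send simultaneously, so snapshot outgoing chunks first.
        messages = [((i - step) % n, [data[i][k] for k in chunk((i - step) % n)]) for i in range(n)]
        for i, (c, values) in enumerate(messages):
            dst = (i + 1) % n
            for k, v in zip(chunk(c), values):
                data[dst][k] += v  # accumulate the neighbour's partial sum

    # Phase 2: all-gather. Each fully reduced chunk travels around the ring,
    # overwriting the stale partial sums on the other workers.
    for step in range(n - 1):
        messages = [((i + 1 - step) % n, [data[i][k] for k in chunk((i + 1 - step) % n)]) for i in range(n)]
        for i, (c, values) in enumerate(messages):
            dst = (i + 1) % n
            for k, v in zip(chunk(c), values):
                data[dst][k] = v  # copy the finished chunk
    return data


workers = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0], [100.0, 200.0, 300.0, 400.0]]
print(ring_allreduce(workers)[0])  # [111.0, 222.0, 333.0, 444.0]
```

The appeal of the ring layout is that each worker sends about 2(n - 1)/n times its vector size in total, so per-worker traffic stays nearly constant as workers are added. Splitting traffic across several rings, as the name "multi-ring" suggests, would be one way to use more of the available links at once, but the article gives no detail on IBM's variant.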
