Monash University is embarking on a drive to make it easier for researchers to visualise and process data captured from electron microscopes.

At the core of Monash's research infrastructure are two GPU-based supercomputers, MASSIVE-1 at the Australian Synchrotron research facility and MASSIVE-2 at Monash University in Clayton, which both went live in March 2012.

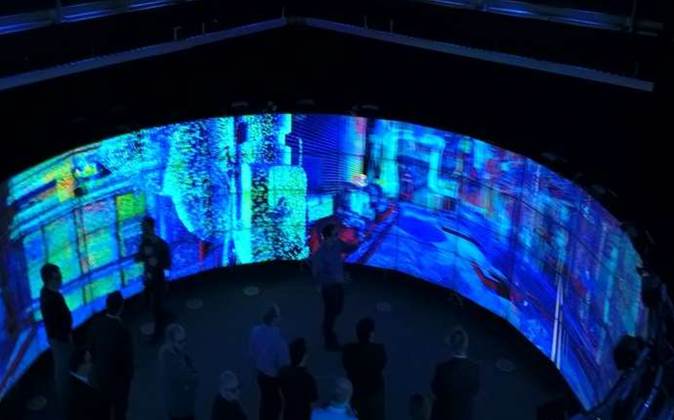

Accompanying the MASSIVE (Multi-modal Australian ScienceS Imaging and Visualisation Environment) supercomputers is the CAVE-2 data visualisation facility, rolled out two years ago.

CAVE-2 is a video wall of 80 46-inch 3D LCD panels, arranged as 20 four-panel columns, with each column powered by a Dell workstation.

The data visualisation facility offers a combined total of around 84 million pixels across all its displays, along with 22.2-channel audio.
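As a rough sanity check on that pixel figure, the arithmetic can be sketched in a few lines of Python. The panel count and column layout come from the description above; the per-panel resolution of 1366 x 768 is an assumption made for illustration and is not stated in the article.

```python
# Layout figures from the article: 20 columns of four panels each.
COLUMNS = 20
PANELS_PER_COLUMN = 4

# Assumption (not from the article): each panel is 1366 x 768 pixels.
PANEL_WIDTH_PX = 1366
PANEL_HEIGHT_PX = 768


def total_pixels(columns, panels_per_column, width_px, height_px):
    """Return the combined pixel count of a wall of identical panels."""
    return columns * panels_per_column * width_px * height_px


if __name__ == "__main__":
    pixels = total_pixels(COLUMNS, PANELS_PER_COLUMN, PANEL_WIDTH_PX, PANEL_HEIGHT_PX)
    print(f"{pixels / 1e6:.1f} million pixels")
```

Under that assumed resolution the total comes to just under 84 million pixels, which is consistent with the "around 84 million" quoted for the facility.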

The manager of Monash University's visualisation program, David Barnes, told iTnews his team was looking at ways to take data that has been captured by an electron microscope and crunched in MASSIVE, and easily visualise it in CAVE-2.

"MASSIVE will typically be used to process images acquired from a scanner of some sort. That could be processing one great big image, or hundreds of images in a study," he said.

"The Monash research network is a fabric that's typically 10 gigabits per second, which we can then run into CAVE-2, because CAVE-2 is on the same network.

"So the question is: what do users do in MASSIVE that could be visualised in CAVE-2?"

The process

According to Barnes, many research projects across fields such as medicine, engineering, life sciences and geosciences begin with a researcher booking time on an electron microscope.

Data produced by scanners at Monash Biomedical Imaging is put into a database called DaRIS and made available on a file share on MASSIVE.

Information generated by electron microscopes flows through a pipeline to the TARDIS data storage system, while data produced at the Synchrotron is reconstructed on MASSIVE and then either made available to researchers on a MASSIVE drive or put into TARDIS.

But Barnes said once the numbers have been crunched in MASSIVE, creating a 3D visualisation of the data in CAVE-2 requires manual filtering and post-processing.

"As it is, they have to talk to us, and then we have to transform their data, and then we have to invite them back," Barnes said.

"Now don't get me wrong, we like talking to people, but the process is a pain."

In order to make it easier for researchers to visualise their data, Monash is planning to automate the process.

"We're working on allowing users to immediately visualise images on MASSIVE in a web browser with WebGL shading, get the colour and parameters right, and then they can come to the cave and immediately look at it in the cave," Barnes said.

"In pre-visualisation, you're setting up your shading, colours and representation of the data in a web-browser - possibly with a cut-down version of the data to fit the person's graphics card - and then being able to apply all of those shading options in CAVE-2 directly after a transfer of the data."

Improving the workflow

Barnes said some scientists will start noticing the change in the coming weeks.

"We're going to put that out in front of some selected test users in the next two weeks," he said.

"It's been prototyped. It's quite important to get a few things ironed out before we put that in front of users.

"But that will allow them to operate a folder of 2D images that we then stack into a 3D image and visualise for them, and they'll be able to look at it in CAVE-2."
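The stacking step Barnes describes, along with the "cut-down version of the data" he mentions for browser previews, can be illustrated with a minimal Python sketch. This is not Monash's implementation: the function names, the file names and the use of plain nested lists in place of real image files are all hypothetical, chosen only to show the idea.

```python
import re


def natural_key(filename):
    """Sort key that orders 'slice_2.tif' before 'slice_10.tif'."""
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r"(\d+)", filename)]


def stack_slices(slices_by_name):
    """Stack equally sized 2D slices into a 3D volume indexed [z][y][x].

    slices_by_name maps a (hypothetical) file name to a 2D list of pixels.
    """
    ordered_names = sorted(slices_by_name, key=natural_key)
    volume = [slices_by_name[name] for name in ordered_names]
    # Every slice must have the same height and width to form a volume.
    shapes = {(len(s), len(s[0])) for s in volume}
    if len(shapes) > 1:
        raise ValueError(f"slices differ in size: {sorted(shapes)}")
    return volume


def downsample(volume, step):
    """Keep every step-th voxel along each axis for a crude cut-down preview."""
    return [[row[::step] for row in plane[::step]] for plane in volume[::step]]


if __name__ == "__main__":
    folder = {
        "slice_10.tif": [[3, 3], [3, 3]],
        "slice_2.tif": [[2, 2], [2, 2]],
        "slice_1.tif": [[1, 1], [1, 1]],
    }
    volume = stack_slices(folder)
    print(len(volume), "slices stacked")
    print(downsample(volume, 2))
```

The natural sort matters because a plain alphabetical sort would place `slice_10.tif` ahead of `slice_2.tif` and scramble the depth axis. A real pipeline would read image files and would likely use a smarter reduction than dropping voxels.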

The improved workflow should make it easier for researchers to make the most of the serious computational power of Monash's MASSIVE supercomputers.

At launch, each cluster contained 42 Intel servers and 84 Nvidia GPUs, with each machine delivering a theoretical peak of 49 TFLOPS.

Since then, MASSIVE-2 has been upgraded and now includes 1720 CPU-cores and 244 Nvidia GPUs across 118 nodes.

The operating system on both machines has also been upgraded from CentOS 5 to 6, while the original MOAB cluster workload management system has been replaced with SLURM.

Andrew Sadauskas travelled to Melbourne as a guest of Nvidia.
