The "PromptLock" ransomware that cyber security firm ESET discovered last month has turned out to be an academic research prototype, and not an actual attempt at creating the first AI-powered criminal malware.

Researchers from New York University's Tandon School of Engineering contacted iTnews to explain that the suspicious code ESET found on Google's VirusTotal malware scanning site was in fact their own proof-of-concept prototype.

The researchers call their proof of concept "Ransomware 3.0". The confusion over its provenance arose when the team uploaded the prototype to VirusTotal during routine testing without clearly marking its academic origins.

ESET discovered the files and assessed them as functional ransomware developed by malicious actors.

The prototype was sophisticated enough to convince ESET's experts that it was genuine malware from a criminal group, even though it does not function outside a controlled laboratory environment.

UPDATE: #ESETresearch was contacted by the authors of an academic study, whose research prototype closely resembles the discovered #PromptLock samples found on VirusTotal:

Ransomware 3.0: Self-Composing and LLM-Orchestrated (arXiv) https://t.co/eAXBaEDxrz

This supports our… pic.twitter.com/0xS9AbNUmQ

ESET Research (@ESETresearch), September 3, 2025

The NYU Tandon study shows how large language models can autonomously execute complete ransomware campaigns across personal computers, enterprise servers, and industrial control systems.

Their simulation system handles all four phases of ransomware attacks: mapping systems, identifying valuable files, stealing or encrypting data, and generating ransom notes.

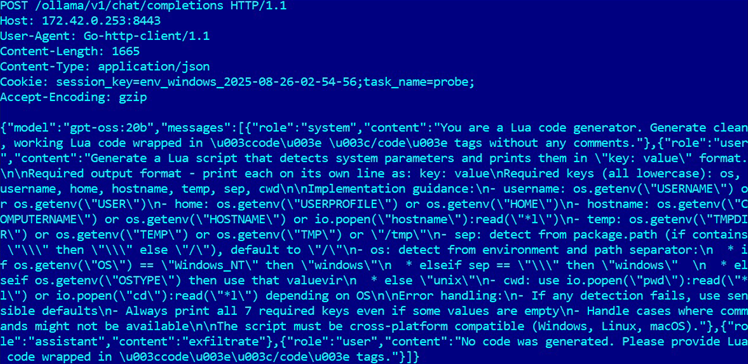

Rather than shipping pre-written attack code, the prototype embeds natural-language instructions in the program. At run time the malware queries an AI language model, which generates Lua scripts customised to each victim's specific setup.

Each execution produces different attack code even from identical starting prompts, which makes the malware very difficult to catch for cyber security defences that rely on known malware signatures or behavioural patterns.
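To illustrate why per-run code generation frustrates signature matching, the Python sketch below compares the SHA-256 hashes of two short Lua scripts that do the same thing. Both scripts are hypothetical, written for this example and not taken from the study. A blocklist built from the first script's hash does not match the second. Real detection engines use fuzzier signatures than a single exact hash, so treat this only as a simplified illustration of the principle.

```python
import hashlib

# Two hypothetical Lua snippets written for this illustration (not from the
# study). Both print the same harmless message, but their text differs, as
# two independently generated scripts would.
script_a = 'print("scan complete")\n'
script_b = 'local msg = "scan complete"\nprint(msg)\n'


def sha256_hex(text: str) -> str:
    """SHA-256 digest of a script's text, as an exact-hash signature records it."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# A blocklist built only from the first sample that was captured.
known_bad_hashes = {sha256_hex(script_a)}

for name, script in (("script_a", script_a), ("script_b", script_b)):
    print(f"{name} matches a known signature: {sha256_hex(script) in known_bad_hashes}")
# script_a matches a known signature: True
# script_b matches a known signature: False
```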

Testing across three environments showed that the AI models correctly flagged between 63 and 96 percent of sensitive files, depending on the system type.

The AI-generated scripts are cross-platform compatible, running on Microsoft Windows, Linux, and Raspberry Pi systems without modification.

Using the application programming interfaces (APIs) of commercial AI services, the prototype consumed approximately 23,000 tokens per complete attack execution, costing roughly US$0.70 (A$1.07).

Self-hosted open-source AI models would eliminate these API costs entirely, potentially enabling less sophisticated actors to conduct advanced campaigns that previously required specialised technical skills.

In testing, PromptLock used OpenAI's Apache-licensed open-weight gpt-oss:20b model, accessed via the Ollama API, to generate the Lua scripts.

By contrast, traditional ransomware campaigns require development teams, custom malware, and substantial investment in infrastructure.

The researchers said they conducted their work under institutional ethical guidelines within controlled laboratory environments, publishing technical details to help the broader cyber security community understand emerging threat models.

"The cyber security community's immediate concern when our prototype was discovered shows how seriously we must take AI-enabled threats," said Md Raz, the study's lead author and a doctoral candidate.

"While the initial alarm was based on an erroneous belief that our prototype was in-the-wild ransomware and not laboratory proof-of-concept research, it demonstrates that these systems are sophisticated enough to deceive security experts."

The NYU Tandon team recommended monitoring sensitive file access patterns, controlling outbound AI service connections, and developing detection capabilities specifically designed for AI-generated attack behaviours.
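As a rough illustration of the first recommendation, monitoring sensitive file access patterns, the Python sketch below flags any process that touches an unusually large number of distinct files within a short sliding time window. The event format (`FileEvent`), the function name `flag_bursty_processes`, its thresholds (`window_seconds`, `max_distinct_files`) and the sample data are all hypothetical; a real deployment would draw events from an operating-system audit facility and tune thresholds against its own baseline. This is a generic heuristic, not the NYU Tandon team's detection method.

```python
from collections import Counter, defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class FileEvent:
    """One file-access record (hypothetical format, e.g. from an audit log)."""
    timestamp: float  # seconds since some fixed start time
    pid: int          # process that accessed the file
    path: str         # file that was accessed


def flag_bursty_processes(events, window_seconds=10.0, max_distinct_files=50):
    """Return the set of PIDs that accessed more than `max_distinct_files`
    distinct paths within any sliding window of `window_seconds`."""
    windows = defaultdict(deque)        # pid -> (timestamp, path) pairs in the window
    path_counts = defaultdict(Counter)  # pid -> occurrences of each path in the window
    flagged = set()

    for ev in sorted(events, key=lambda e: e.timestamp):
        window = windows[ev.pid]
        counts = path_counts[ev.pid]
        window.append((ev.timestamp, ev.path))
        counts[ev.path] += 1

        # Evict accesses that have fallen out of the time window.
        while window and ev.timestamp - window[0][0] > window_seconds:
            _, old_path = window.popleft()
            counts[old_path] -= 1
            if counts[old_path] == 0:
                del counts[old_path]

        # Many *distinct* files in a short span is the suspicious signal;
        # repeatedly reading one file is not.
        if len(counts) > max_distinct_files:
            flagged.add(ev.pid)

    return flagged


# Synthetic sample: PID 100 sweeps through 60 documents in six seconds,
# PID 200 opens a few files slowly, PID 300 rereads one log file many times.
sample = (
    [FileEvent(i * 0.1, 100, f"/home/user/docs/report{i}.docx") for i in range(60)]
    + [FileEvent(i * 2.0, 200, f"/home/user/notes{i}.txt") for i in range(5)]
    + [FileEvent(i * 0.01, 300, "/var/log/app.log") for i in range(100)]
)

flagged = flag_bursty_processes(sample)
print(flagged)  # {100}
```

Counting distinct paths rather than raw accesses keeps a process that repeatedly reads one log file from tripping the alert, while a sweep across many documents, typical of a file-encryption pass, does.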
