While the core foundations of cloud infrastructure-as-a-service (IaaS) offerings are now more than 20 years old, we are still seemingly in the early stages of learning how to effectively and efficiently engineer cloud environments.

The initial adoption of cloud virtualisation in the 2000s was soon followed by the containerisation craze that kicked off in the mid-2010s, and then the adoption of serverless computing soon after.

These architecture models are dominant today, but the ways in which they and the applications they support are created and deployed have experienced massive change.

The concept of managing and provisioning infrastructure through software – often dubbed ‘infrastructure as code’ – is now commonplace and, thanks to increasing automation, has given rise to the follow-on idea of ‘infrastructure-from-code’, where the cloud infrastructure infers requirements from the applications that it runs.

Building on fast-moving foundations

According to Manju Bhat, vice president and fellow in the software engineering practice at Gartner, each evolutionary step in cloud engineering has come in response to limitations of the previous iteration, and that same driver will continue stimulating cloud engineering developments.

“All containerisation is built on top of virtualisation, so we can essentially treat virtualisation as a substrate of infrastructure today – I doubt many people are deploying applications directly on bare metal,” Bhat said.

“The de facto paradigm is virtualisation, and you layer containerisation on top. Then the containers become the engine for serverless in many cases.”

But that model has evolved further as organisations have sought greater automation to support the rapid delivery of code into the cloud.

“If you look at the last four or five years, there has been this concept of GitOps that has emerged,” Bhat said.

“If you look at the way application developers manage application code within source-code repositories, it asks ‘Why can’t infrastructure engineers or cloud engineers manage cloud infrastructure code within the same paradigm?’”

Another important development has been the introduction of generative AI tools into the engineering process.

“Now generative AI can generate the necessary infrastructure for you,” Bhat said.

“This is coming to be known as ‘infrastructure-from-code’, where you don’t have dedicated DevOps engineers who write infrastructure code, but you (automatically) generate infrastructure code.”
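As a rough illustration of the idea, and not a depiction of any tool Bhat describes, the Python sketch below has application code declare what it needs and then derives a resource plan from those declarations. The `requires` decorator, the `infer_infrastructure` function and the resource names are all hypothetical.

```python
# Hypothetical sketch: application code declares its needs, and a
# resource plan is inferred from those declarations.
REQUIREMENTS = []  # filled in as application handlers are defined


def requires(kind, name):
    """Hypothetical decorator recording that a handler needs a resource."""
    def decorator(func):
        REQUIREMENTS.append(
            {"kind": kind, "name": name, "used_by": func.__name__}
        )
        return func  # the handler itself is left unchanged
    return decorator


@requires("queue", "orders")
@requires("bucket", "invoices")
def handle_order(event):
    """Ordinary application code; it contains no infrastructure logic."""
    return {"status": "accepted", "order": event["id"]}


def infer_infrastructure(requirements):
    """Collapse recorded requirements into a de-duplicated, sorted plan."""
    plan = {}
    for req in requirements:
        key = (req["kind"], req["name"])
        plan.setdefault(key, set()).add(req["used_by"])
    return [
        {"kind": kind, "name": name, "used_by": sorted(users)}
        for (kind, name), users in sorted(plan.items())
    ]


PLAN = infer_infrastructure(REQUIREMENTS)
```

In this sketch the engineer writes only the handler, and the plan is a by-product of the code. A real infrastructure-from-code tool would go on to provision the resources the plan lists.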

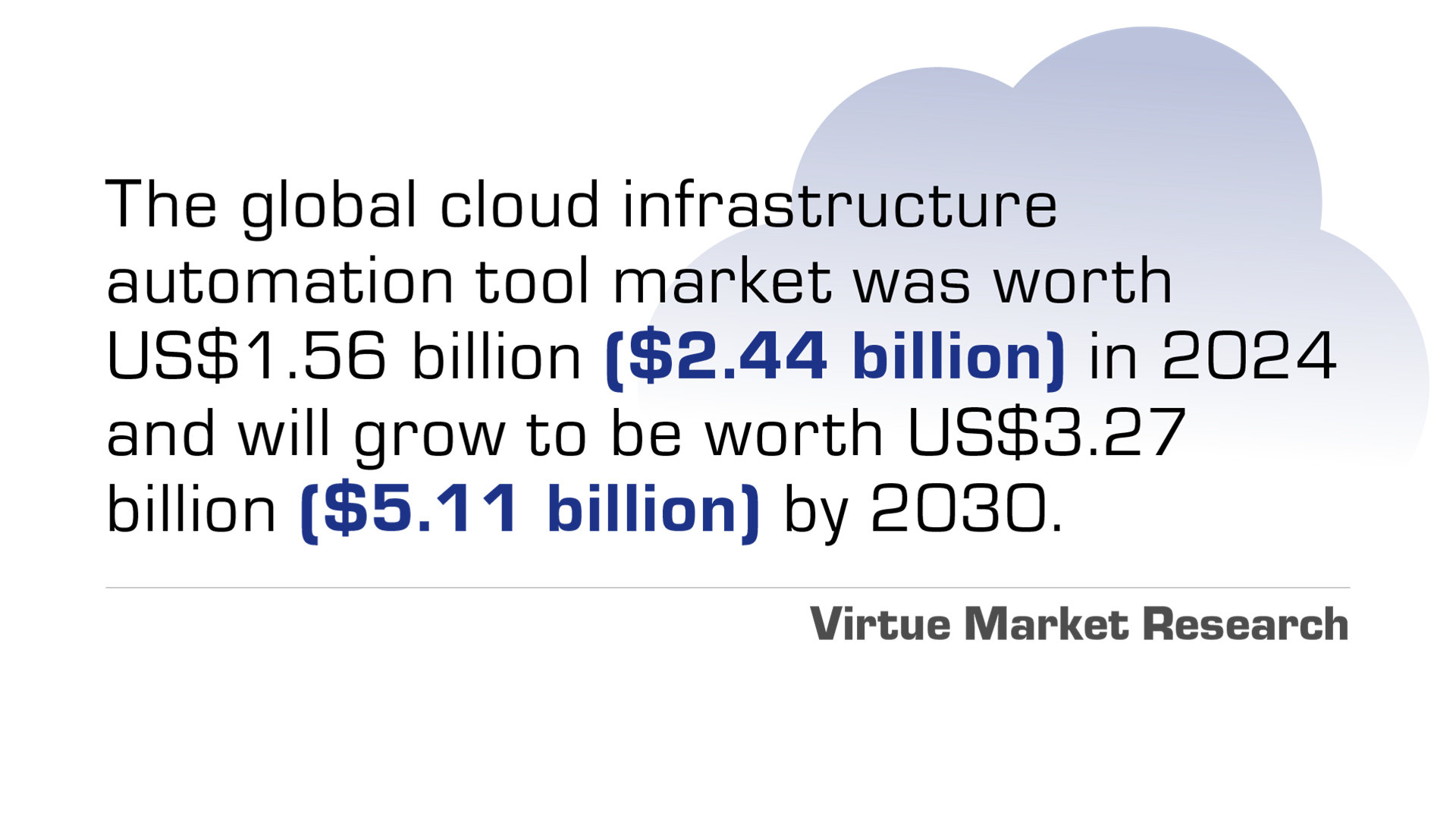

The full impact of this evolution within cloud architecture is difficult to pin down, but according to Virtue Market Research, the broader global cloud infrastructure automation tool market was worth US$1.56 billion ($2.44 billion) in 2024 and is forecast to reach US$3.27 billion ($5.11 billion) by 2030.

Containerisation for simplification

At the diversified comparison platform company Finder, the drive toward simplicity and automation in cloud engineering has made containerisation the default approach for cloud development, as this allows services to run consistently across local environments, development, quality assurance, and production.

“Same code, same environment variables, just different security connectivity,” said Finder’s chief technology officer Joe Waller.

“Once we have done that then the quest becomes automating as much as we possibly can.”

This dedication to standardisation has enabled Finder to effectively clone its entire environment at will, through a service called Auto Local.

“You run one command from your terminal and then Auto Local will build a running version of Finder on the machine you are running it from,” Waller said.

“It will go and install all of the most appropriate packages, get the most recent code, and get the service up and running.

“That has saved an enormous amount of time. The trick is making sure that it stays put together.”

This ‘trick’ manifests as a dedication to ensuring that any changes are properly documented so that automation within the platform isn’t broken.

“It is socially unacceptable to do something that breaks the automation,” Waller said.

A key aspect of that automation is Finder’s adoption of serverless functions across its cloud partners.

“The focus is on the code as the important thing, rather than how it is executed,” Waller said.

“We have a set of patterns that we use, and the engineers focus on the code that runs, and getting it into the appropriate repository, and then the automation takes care of the rest.”

Blurring the lines

Significant change is also occurring in the broader DevOps ecosystem, particularly with the rapid uptake of internal developer portals (IDPs) based on Backstage, an open-source framework which Gartner’s Bhat said dominated the market today.

“IDPs have become the storefront for your internal developer platform capabilities,” Bhat said.

“They become the window to accessing a lot of the platform capabilities.”

For Peter Wolski, general manager of reliability and security at MYOB, the main game now was ensuring that cloud developers could become productive quickly, and that meant abstracting more and more infrastructure responsibility.

He said a key consideration here was the provision of standardised tools, to ensure consistency in development activity, but with some leeway for experimentation when developers saw the opportunity to deliver new value.

“We want them to share their stories of what they did and what they have learned, what the trade-offs were that they made, and what they would do differently,” Wolski said.

“That provides the engineering organisation with a feedback loop around trialling some of those things so others can build on that knowledge.

“We want to empower teams to make decisions that only impact them.”

For Finder, the development of Auto Local and the use of Terraform to move code into production have alleviated the need for an additional IDP solution. Standardisation is achieved through the use of a tech menu that allows cloud developers to choose from a range of tools without potentially breaking Auto Local.

For example, Finder standardised its use of linters (code analysis tools) but allowed more freedom in the choice of text editors, although Waller said there was a definite push to have developers adopt the AI-based code editor Cursor.

Accelerated delivery

This dedication to process and standards enabled Waller to ensure that code deployment was keeping pace with expectations.

“By standardising how you build your service you are making your technology platform like LEGO,” Waller said.

“We measure how many go-lives we can deploy over a given period, and how much change we can get out on a group basis and on a per-engineer basis, and how we can improve that.

“By measuring the different pace of productivity, certain things become self-evident, such as fallow time when waiting for deployments to go onto a dev environment.”
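The kind of measurement Waller describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions and not Finder's tooling: the record layout, the function names and the sample data are all invented for the example.

```python
from collections import Counter
from datetime import datetime

# Illustrative records only; these are not Finder's data.
DEPLOYMENTS = [
    {"engineer": "alex", "merged": datetime(2025, 3, 3, 9, 0),
     "deployed": datetime(2025, 3, 3, 11, 0)},
    {"engineer": "sam", "merged": datetime(2025, 3, 5, 14, 0),
     "deployed": datetime(2025, 3, 5, 15, 0)},
    {"engineer": "alex", "merged": datetime(2025, 3, 10, 10, 0),
     "deployed": datetime(2025, 3, 10, 13, 0)},
]


def go_lives_per_week(deployments):
    """Count go-lives per ISO (year, week) of the deployment date."""
    counts = Counter()
    for d in deployments:
        iso = d["deployed"].isocalendar()
        counts[(iso[0], iso[1])] += 1
    return dict(counts)


def go_lives_per_engineer(deployments):
    """Count go-lives attributed to each engineer."""
    return dict(Counter(d["engineer"] for d in deployments))


def mean_wait_hours(deployments):
    """Average 'fallow time' in hours between merge and deployment."""
    waits = [
        (d["deployed"] - d["merged"]).total_seconds() / 3600
        for d in deployments
    ]
    return sum(waits) / len(waits)
```

Tracking the wait between merge and deployment alongside the raw counts is what would surface the idle periods Waller mentions.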

Examining the production pipeline in this way enables Waller to tune activities so that engineers are doing only the essentials, thereby maximising their productivity. This has also given Waller a strong understanding of the role that AI tools such as Cursor could play in accelerating code delivery.

“AI is going to disrupt everything,” Waller said.

“I can see a big increase in productivity – it might be as much as two times from the measurements I’ve made. So, I’m not signing up any long-term contracts right now, because everything is being disrupted all the time.”
