Each time researchers at the Queensland Brain Institute build a 3D map of a living organism, they create another 30 gigabytes or so of data.

As real-time brain imagery gets better, faster, and bigger, the QBI is being forced to invest in some pretty serious IT kit to keep up.

QBI’s microscopy facility manager Luke Hammond took iTnews inside the fast-moving research enterprise.

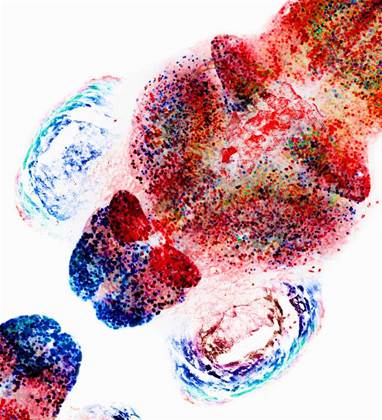

He said recent advances in both the speed and sensitivity of imaging equipment mean researchers can see far more of the brain than ever before, and at far greater speed. They can now visualise the brains of animals while the animals are still alive, observing neural activity at cellular resolution.

"By observing how neurons connect and communicate we can learn how the brain processes information. Essentially, this work provides a window into to consciousness,” he said.

“It is this rapid generation of image data and the need to combine, visualise and analyse huge 3D images that is driving the demand for computational hardware and knowledge.”

For example, imaging the brain of a live zebrafish with a spinning disk confocal microscope generates about 30GB each time, and Hammond hopes scientists will soon be able to repeat the process in less than a second.
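To put those figures in perspective, the back-of-envelope sketch below works out the sustained data rate implied by producing one 30GB volume per second, and how much data an hour of continuous acquisition would generate. It assumes decimal gigabytes and non-stop imaging; neither detail comes from QBI, and the function names are illustrative only.

```python
# Back-of-envelope data rates for volumetric imaging.
# Assumption: "30GB" is treated as 30 decimal gigabytes (30e9 bytes);
# the article does not say whether GB or GiB is meant.

def sustained_rate_gb_per_s(volume_gb: float, seconds_per_volume: float) -> float:
    """Average write rate needed to keep up with one volume every `seconds_per_volume`."""
    if seconds_per_volume <= 0:
        raise ValueError("seconds_per_volume must be positive")
    return volume_gb / seconds_per_volume


def acquisition_volume_tb(volume_gb: float, seconds_per_volume: float, hours: float) -> float:
    """Total data from `hours` of continuous acquisition, in TB (1 TB = 1000 GB)."""
    if seconds_per_volume <= 0 or hours < 0:
        raise ValueError("seconds_per_volume must be positive and hours non-negative")
    volumes = hours * 3600 / seconds_per_volume
    return volumes * volume_gb / 1000


if __name__ == "__main__":
    # One 30GB volume per second (the "less than a second" target, rounded up).
    print(f"{sustained_rate_gb_per_s(30, 1.0):.1f} GB/s")            # 30.0 GB/s
    # One hour of continuous acquisition at that rate.
    print(f"{acquisition_volume_tb(30, 1.0, 1):.1f} TB per hour")    # 108.0 TB per hour
```

At one volume per second, the storage tier would have to absorb roughly 30GB/s sustained, which is consistent with the emphasis on low-latency, high-throughput storage that Carroll describes.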

Really big data processing in real time

QBI’s senior IT manager Jake Carroll told iTnews the computational challenges posed by processing that research data – especially in real time – are immense.

“If you can imagine or visualise all of that, the floating point capability as well as the disk I/O credentials that are required to absorb that much data in a second, and do it in real time, it is a fairly serious undertaking in engineering,” Carroll said.

“We’re not dealing with standard resolutions or high-definition content anymore – we’re dealing with resolutions of hundreds of thousands by tens of thousands of pixels at a time.

“When you’re doing that in a very high colour depth and in ‘z-stacks’ or with any kind of large proportionality, you need significant floating point memory and GPU capability at hand otherwise it’s almost insurmountable.
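The memory pressure Carroll describes follows from simple multiplication: width × height × slices × channels × bytes per sample. The sketch below assumes hypothetical dimensions of 100,000 by 20,000 pixels (chosen only to fall inside the range he quotes), 16-bit camera data, and float32 as a working type for GPU processing; none of these are QBI's actual parameters.

```python
# Rough uncompressed size of a large microscopy image or z-stack.
# All example dimensions are hypothetical, chosen only to sit inside
# the "hundreds of thousands by tens of thousands of pixels" range quoted.

def raw_image_bytes(width: int, height: int, z_slices: int = 1,
                    channels: int = 1, bytes_per_sample: int = 2) -> int:
    """Bytes needed to hold an uncompressed image (or z-stack) in memory.

    bytes_per_sample=2 models 16-bit camera data; 4 models float32,
    a common working type for GPU processing such as deconvolution.
    """
    for name, value in [("width", width), ("height", height), ("z_slices", z_slices),
                        ("channels", channels), ("bytes_per_sample", bytes_per_sample)]:
        if value <= 0:
            raise ValueError(f"{name} must be positive")
    return width * height * z_slices * channels * bytes_per_sample


def to_gb(n_bytes: int) -> float:
    """Convert bytes to decimal gigabytes."""
    return n_bytes / 1e9


if __name__ == "__main__":
    w, h = 100_000, 20_000  # hypothetical single-plane dimensions
    print(f"16-bit plane:       {to_gb(raw_image_bytes(w, h)):.1f} GB")                      # 4.0 GB
    print(f"float32 plane:      {to_gb(raw_image_bytes(w, h, bytes_per_sample=4)):.1f} GB")  # 8.0 GB
    print(f"16-bit, 100 slices: {to_gb(raw_image_bytes(w, h, z_slices=100)):.1f} GB")        # 400.0 GB
```

Converting to floating point for processing doubles the footprint of 16-bit data, and a z-stack multiplies it again by the number of slices, which is why a single dataset can quickly exceed the memory of an ordinary workstation.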

“You also need a reliable platform and software framework in front of that from a full stack development perspective, or else your wonderful hardware design isn’t of any value, or won’t work as efficiently as it could.”

According to Carroll, the techniques QBI uses to process data at that scale rely on continuously improving hardware design and algorithms.

“GPU has become a big deal, and tightly-connected, high bandwidth, low latency storage is important. On the storage front, flash/NAND based architectures have become paramount to our workloads, although not the kind most enterprises are accustomed to,” he said.

“I refer to PCIe-connected NVMe technology, because nothing else is low-latency or high-throughput enough.”

Massive on-site compute

In order to process all that data, QBI has assembled some heavy-duty on-site compute capabilities, which include 19,968 Nvidia K80 GPU cores for high-performance computing, and around 7 petabytes of storage.

“Agility and cutting edge capability counts in this space. Being able to react to niche requirements and business drivers when you’re dealing with esoteric hardware/software platforms is sometimes more difficult in commodity or utility cloud computing environments,” Carroll said.

“It isn’t always an answer or instant enabler to go straight to a cloud-centric generic hardware approach.”

According to Carroll, QBI’s impressive list of on-premises compute capabilities includes:

- 1,800 HPC cores in the form of Intel Xeon E5 v2 and v3 processors

- Multiple GPU arrays that include 19,968 Nvidia K80 GPU cores

- Dedicated big-memory nodes for unusual workloads paired with GPU-based deconvolution applications

- Dedicated mass storage systems for archival and file serving storage requirements which leverage Oracle’s Hierarchical Storage Manager (Oracle HSM) technologies

- Around 7 petabytes of Oracle HSM storage, including flash, high-speed disk, NL-SAS disk, and enterprise tape technologies that follow industry best practice for data integrity verification and validation

Work with QRISCloud and NeCTAR

Even with this computational backing, the QBI team is also drawing on the cloud, in the form of the federated NeCTAR research infrastructure and QRISCloud.

"We don’t believe we are or can be an island. We are an organisation that works with our sisters and brothers in the UQ and the wider research community to do what we do,” Carroll said.

Carroll said that, depending on the research project, there is no reason why QBI couldn’t eventually burst into compute capabilities hosted in the cloud.

In such situations, the University of Queensland’s MeDiCi (metropolitan data caching infrastructure) storage fabric, recently prototyped by the university’s research computing centre, is likely to help.

“As things develop we could move certain workloads that suit into the QRISCloud and use the services of the research computing centre (RCC) as well to augment our capability. It really depends upon the workload and practicality of data movement compared to the locality of the data generating instrument,” Carroll said.
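The trade-off between data movement and instrument locality can be sketched as a transfer-time calculation. The link speeds and efficiency factor below are hypothetical, not figures for QBI, the RCC, QRISCloud or MeDiCi; only the 30GB volume size comes from the article.

```python
# Time to move a dataset off-site versus processing it next to the instrument.
# Link speeds and the efficiency factor are assumptions for illustration only.

def transfer_seconds(size_gb: float, link_gbit_per_s: float, efficiency: float = 1.0) -> float:
    """Seconds to move `size_gb` decimal gigabytes over a link of `link_gbit_per_s`.

    `efficiency` (0 < efficiency <= 1) is a crude allowance for protocol overhead.
    """
    if link_gbit_per_s <= 0 or not (0 < efficiency <= 1):
        raise ValueError("link speed must be positive and 0 < efficiency <= 1")
    bits = size_gb * 8e9  # 1 decimal GB = 8e9 bits
    return bits / (link_gbit_per_s * 1e9 * efficiency)


if __name__ == "__main__":
    volume_gb = 30  # one volume, per the figure quoted earlier in the article
    for link in (1, 10, 100):  # hypothetical link speeds in Gbit/s
        print(f"{link:>3} Gbit/s: {transfer_seconds(volume_gb, link):.1f} s per volume")
```

At a hypothetical 10 Gbit/s, each 30GB volume takes about 24 seconds to ship, so an instrument producing one volume per second would outrun the link by more than an order of magnitude. That is the kind of locality calculation that decides whether a workload suits the cloud.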

Moving faster

The QBI is currently preparing to install new imaging equipment that will slash the time it takes to create a 3D snapshot of an entire brain in a living creature down to under a second.

This will allow the researchers to monitor what happens inside an animal in close to real time.

“We’ve had the instruments for doing this [3D imaging] research for around three years. The technology has really been improving rapidly during those years, and while we are currently imaging the brains in live animals, we are already building a new imaging system that will allow us to do so exponentially faster,” Hammond said.

“Internationally, there’s a few of these systems coming online in the US and Europe, and there’s a few experimental systems within Australia, but QBI will be the first to be doing this in Queensland.”

For Carroll, this will mean making sure QBI’s computer science can keep pace with the frontiers of neuroscience.

“When you’re building bleeding-edge microscopy equipment, you have to keep up by building bleeding-edge computing infrastructure as well, and that’s an interesting place in itself, because it leaves room for application-specific and interesting custom silicon scenarios,” Carroll said.

According to Hammond, the long-term implications of this revolution in neuroscience are profound.

“It’s a really exciting time for neuroscience. For the first time we can image a whole living brain at cellular resolution fast enough to observe neural activity,” Hammond said.

“So all these questions that until now have been out of our reach – like ‘how does a brain process visual information’ – well, now we can answer a question like this because we can now see the neurons firing in real time.

“We can then use these images to generate new algorithms based on how a real brain functions, and apply those lessons to things like deep learning and machine learning.”


